Interactive Image Processing Graphs

Here you can explore a few Desmos graphs featuring power-law gamma correction and linear contrast stretching.

Here you can explore several Desmos graphs featuring distributions, permutations/combinations, and regression. For the best experience, view these graphs on a computer rather than a phone.

Multiple Linear Regression

Introduction and Background

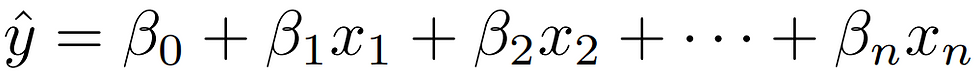

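Multiple Linear Regression is a method of approximating the relationship between several independent variables and a single dependent variable with a hyperplane. For instance, if the dataset has n independent variables $x_1, \ldots, x_n$ and one dependent variable $y$, then we look for the n-dimensional hyperplane of the form

$$\hat{y} = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \cdots + \beta_n x_n$$

that best fits the dataset. This is done by choosing the coefficients $\beta_0, \ldots, \beta_n$ that minimize the sum of squared errors between the hyperplane's predictions and the observed values of $y$. A quick derivation can be seen below.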
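One standard way to carry out this minimization is in matrix form. The notation here is our own and may differ from the original derivation. Let $A$ be the $m \times (n+1)$ design matrix whose $i$-th row is $[1, x_{i1}, \ldots, x_{in}]$ (the feature matrix with a column of ones prepended for the intercept). Let $\mathbf{y}$ be the vector of the $m$ observed targets and $\boldsymbol\beta = [\beta_0, \ldots, \beta_n]^\top$:

$$
\begin{aligned}
S(\boldsymbol\beta) &= \lVert \mathbf{y} - A\boldsymbol\beta \rVert^2 = (\mathbf{y} - A\boldsymbol\beta)^\top (\mathbf{y} - A\boldsymbol\beta) \\
\nabla_{\boldsymbol\beta} S &= -2A^\top(\mathbf{y} - A\boldsymbol\beta) = \mathbf{0} \\
A^\top A\,\boldsymbol\beta &= A^\top \mathbf{y} \\
\boldsymbol\beta &= (A^\top A)^{-1} A^\top \mathbf{y}
\end{aligned}
$$

The third line is the system known as the normal equations. The last step assumes $A^\top A$ is invertible, which holds when the columns of $A$ are linearly independent (in particular, this requires $m \ge n + 1$).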

Examples

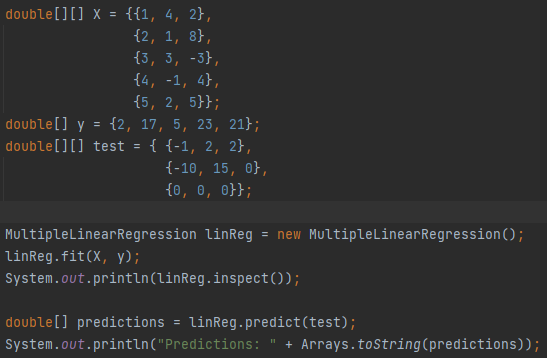

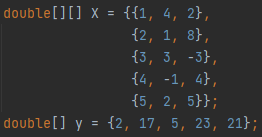

- Creating a Dataset

The first thing you will need is a dataset from which to compute the hyperplane for the multiple linear regression model. We create two arrays, X and y, which hold the features and targets, respectively. Each row of X contains a single input point (one observation), and each column contains the values of one independent variable. Each entry of y is the target value for the corresponding row of X. These arrays can hold any data you want, but for this example we will stick with a few simple numbers.
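As an illustration of that layout, here is a small NumPy dataset. The values are hypothetical and are not the ones used in the original screenshots. The targets are generated from a known plane, $y = 1 + 2x_1 + 3x_2$, so the correct fit is known in advance:

```python
import numpy as np

# Hypothetical example data: each row of X is one observation,
# each column holds the values of one independent variable.
X = np.array([
    [1.0, 2.0],
    [2.0, 1.0],
    [3.0, 4.0],
    [4.0, 3.0],
    [5.0, 5.0],
])

# One target per row of X, generated from y = 1 + 2*x1 + 3*x2
# so that the exact regression coefficients are known.
y = 1.0 + 2.0 * X[:, 0] + 3.0 * X[:, 1]

print(X.shape)  # (5, 2): 5 observations, 2 independent variables
print(y.shape)  # (5,)
```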

- Defining and Fitting the Model

We can now define our model by creating a MultipleLinearRegression object. In this example we name the model "linReg", but you can name it anything you want. Once the model has been defined, we can "fit" it to our dataset. This is the step that actually computes the hyperplane, finding the optimal parameters by solving the normal equations.
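The internals of the MultipleLinearRegression class are not shown here. The following is a minimal sketch of what a normal-equations fit typically looks like. It uses a hypothetical helper, `fit_normal_equations`, that is not part of the original code. A column of ones is prepended so the first parameter is the intercept, and the linear system is solved directly rather than by explicitly inverting $A^\top A$:

```python
import numpy as np

def fit_normal_equations(X, y):
    """Hypothetical helper: return [b0, b1, ..., bn] minimizing the SSE.

    Prepends a column of ones (so b0 is the intercept) and solves the
    normal equations (A^T A) b = A^T y.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    A = np.column_stack([np.ones(len(X)), X])  # design matrix with intercept column
    # Solving the system is numerically safer than computing inv(A^T A).
    return np.linalg.solve(A.T @ A, A.T @ y)

# Hypothetical data generated from y = 1 + 2*x1 + 3*x2
X = np.array([[1.0, 2.0], [2.0, 1.0], [3.0, 4.0], [4.0, 3.0], [5.0, 5.0]])
y = 1.0 + 2.0 * X[:, 0] + 3.0 * X[:, 1]

beta = fit_normal_equations(X, y)
print(beta)  # approximately [1. 2. 3.]
```

Because the example targets lie exactly on a plane, the recovered parameters match the generating coefficients up to floating-point error.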

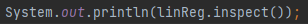

- Inspecting the Fit Model

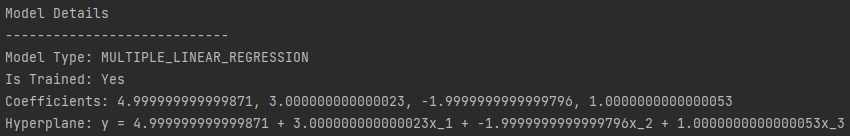

If you want to view the details of the model, including the computed parameters of the line, you can call model_name.inspect(). Below you can see the code for this and the resulting output.
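The exact output of inspect() depends on the author's class. Independently of it, one way to sanity-check fitted parameters is to compare the normal-equations solution against NumPy's own least-squares solver, `np.linalg.lstsq`, and to report the resulting sum of squared errors. This sketch reuses the same hypothetical data as before:

```python
import numpy as np

# Hypothetical data generated from y = 1 + 2*x1 + 3*x2
X = np.array([[1.0, 2.0], [2.0, 1.0], [3.0, 4.0], [4.0, 3.0], [5.0, 5.0]])
y = 1.0 + 2.0 * X[:, 0] + 3.0 * X[:, 1]
A = np.column_stack([np.ones(len(X)), X])  # prepend intercept column

# Parameters from the normal equations
beta_normal = np.linalg.solve(A.T @ A, A.T @ y)
# Independent cross-check with NumPy's least-squares solver
beta_lstsq, _, rank, _ = np.linalg.lstsq(A, y, rcond=None)

# Sum of squared errors of the fitted hyperplane
sse = float(np.sum((y - A @ beta_normal) ** 2))

print(f"intercept: {beta_normal[0]:.4f}")
for i, b in enumerate(beta_normal[1:], start=1):
    print(f"coefficient x{i}: {b:.4f}")
print("sum of squared errors:", sse)
print("matches lstsq:", np.allclose(beta_normal, beta_lstsq))
```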

- Making Predictions

Now that the multiple linear regression model has been fit, we can feed in new data and see what the model predicts the output to be. First, let's define a new array called test that contains the data we want to make predictions on. Each row of test must have the same number of columns as X.

Now let's make predictions on this data, storing the results in a new array.

Finally, let's look at the result of our model's predictions on this test dataset.
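Under the hood, a prediction is just the hyperplane equation evaluated at each new row. The sketch below uses hypothetical fitted parameters $[\beta_0, \beta_1, \beta_2] = [1, 2, 3]$ and a hypothetical `test` array. It prepends the intercept column and takes a matrix-vector product:

```python
import numpy as np

# Hypothetical fitted parameters: [intercept, b1, b2]
beta = np.array([1.0, 2.0, 3.0])

# New inputs with the same column layout as the training X
test = np.array([[0.0, 0.0], [1.0, 1.0], [2.0, 3.0]])

# y_hat = b0 + b1*x1 + b2*x2 for every row, computed as one product
predictions = np.column_stack([np.ones(len(test)), test]) @ beta
print(predictions)  # [ 1.  6. 14.]
```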

- The Whole Thing

For convenience, here is the full code.
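For readers who want something runnable end to end, here is a minimal, hypothetical reimplementation that follows the same workflow: create the data, fit, inspect, and predict. The class and method names mirror those mentioned in the text. The internals are assumptions based on the normal equations, not the author's actual code:

```python
import numpy as np

class MultipleLinearRegression:
    """Hypothetical minimal reimplementation; the original class may differ."""

    def __init__(self):
        self.params = None  # [intercept, b1, ..., bn] once fitted

    @staticmethod
    def _design(X):
        X = np.asarray(X, dtype=float)
        return np.column_stack([np.ones(len(X)), X])  # prepend intercept column

    def fit(self, X, y):
        A = self._design(X)
        # Normal equations: (A^T A) params = A^T y
        self.params = np.linalg.solve(A.T @ A, A.T @ np.asarray(y, dtype=float))
        return self

    def predict(self, X):
        if self.params is None:
            raise RuntimeError("call fit() before predict()")
        return self._design(X) @ self.params

    def inspect(self):
        if self.params is None:
            raise RuntimeError("call fit() before inspect()")
        print(f"intercept: {self.params[0]:.4f}")
        for i, b in enumerate(self.params[1:], start=1):
            print(f"coefficient x{i}: {b:.4f}")

# Hypothetical data generated from y = 1 + 2*x1 + 3*x2
X = np.array([[1.0, 2.0], [2.0, 1.0], [3.0, 4.0], [4.0, 3.0], [5.0, 5.0]])
y = 1.0 + 2.0 * X[:, 0] + 3.0 * X[:, 1]

linReg = MultipleLinearRegression()
linReg.fit(X, y)
linReg.inspect()

test = np.array([[0.0, 0.0], [1.0, 1.0], [2.0, 3.0]])
results = linReg.predict(test)
print(results)  # approximately [ 1.  6. 14.]
```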